Parameters:

- prevImg – first 8-bit input image or pyramid constructed by buildOpticalFlowPyramid().

- nextImg – second input image or pyramid of the same size and the same type as prevImg.

- prevPts – vector of 2D points for which the flow needs to be found; point coordinates must be single-precision floating-point numbers.

- nextPts – output vector of 2D points (with single-precision floating-point coordinates) containing the calculated new positions of input features in the second image; when the OPTFLOW_USE_INITIAL_FLOW flag is passed, the vector must have the same size as the input.

- status – output status vector (of unsigned chars); each element of the vector is set to 1 if the flow for the corresponding feature has been found, otherwise it is set to 0.

- err – output vector of errors; each element of the vector is set to an error for the corresponding feature, and the type of error measure can be set in the flags parameter. If the flow wasn’t found, the error is not defined (use the status parameter to find such cases).

- winSize – size of the search window at each pyramid level.

- maxLevel – 0-based maximal pyramid level number; if set to 0, pyramids are not used (single level), if set to 1, two levels are used, and so on. If pyramids are passed as input, the algorithm will use as many levels as the pyramids have, but no more than maxLevel.

- criteria – parameter specifying the termination criteria of the iterative search algorithm (after the specified maximum number of iterations criteria.maxCount or when the search window moves by less than criteria.epsilon).

- flags – operation flags:

- OPTFLOW_USE_INITIAL_FLOW uses initial estimations, stored in nextPts; if the flag is not set, then prevPts is copied to nextPts and is considered the initial estimate.

- OPTFLOW_LK_GET_MIN_EIGENVALS uses minimum eigenvalues as an error measure (see the minEigThreshold description); if the flag is not set, then the L1 distance between patches around the original and a moved point, divided by the number of pixels in a window, is used as the error measure.

- minEigThreshold – the algorithm calculates the minimum eigenvalue of the 2x2 normal matrix of optical flow equations (this matrix is called a spatial gradient matrix in [Bouguet00]), divided by the number of pixels in a window; if this value is less than minEigThreshold, the corresponding feature is filtered out and its flow is not processed. This removes bad points and gives a performance boost.


Optical Flow

Goal

In this chapter:

- We will understand the concepts of optical flow and its estimation using the Lucas-Kanade method.

- We will use functions like cv2.calcOpticalFlowPyrLK() to track feature points in a video.

Optical Flow

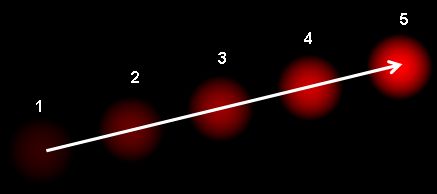

Optical flow is the pattern of apparent motion of image objects between two consecutive frames, caused by the movement of the object or the camera. It is a 2D vector field where each vector is a displacement vector showing the movement of points from the first frame to the second. Consider the image above (Image Courtesy: Wikipedia article on Optical Flow).

It shows a ball moving in 5 consecutive frames. The arrow shows its displacement vector. Optical flow has many applications in areas like:

- Structure from Motion

- Video Compression

- Video Stabilization ...

Optical flow works on several assumptions:

- The pixel intensities of an object do not change between consecutive frames.

- Neighbouring pixels have similar motion.

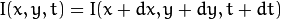

Consider a pixel $I(x,y,t)$ in the first frame (note that a new dimension, time, is added here; earlier we were working with still images only, so there was no need for time). It moves by distance $(dx,dy)$ in the next frame, taken after time $dt$. Since those pixels are the same and the intensity does not change, we can say,

$$I(x,y,t) = I(x+dx,\, y+dy,\, t+dt)$$

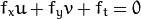

Then take the Taylor series approximation of the right-hand side, remove common terms and divide by $dt$ to get the following equation:

$$f_x u + f_y v + f_t = 0$$
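The Taylor step can be written out as follows, assuming the motion is small enough that terms of second and higher order in $dx$, $dy$ and $dt$ can be dropped:

$$I(x+dx,\, y+dy,\, t+dt) \approx I(x,y,t) + \frac{\partial I}{\partial x}\,dx + \frac{\partial I}{\partial y}\,dy + \frac{\partial I}{\partial t}\,dt$$

Substituting this into $I(x,y,t) = I(x+dx, y+dy, t+dt)$, the $I(x,y,t)$ terms cancel:

$$\frac{\partial I}{\partial x}\,dx + \frac{\partial I}{\partial y}\,dy + \frac{\partial I}{\partial t}\,dt = 0$$

and dividing by $dt$ gives

$$\frac{\partial I}{\partial x}\,\frac{dx}{dt} + \frac{\partial I}{\partial y}\,\frac{dy}{dt} + \frac{\partial I}{\partial t} = 0$$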

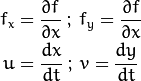

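where:

$$f_x = \frac{\partial I}{\partial x}, \quad f_y = \frac{\partial I}{\partial y}, \quad f_t = \frac{\partial I}{\partial t}, \qquad u = \frac{dx}{dt}, \quad v = \frac{dy}{dt}$$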

The above equation is called the optical flow equation. In it, we can find $f_x$ and $f_y$; they are image gradients. Similarly, $f_t$ is the gradient along time. But $(u,v)$ is unknown. We cannot solve this one equation with two unknown variables, so several methods have been proposed to solve this problem. One of them is Lucas-Kanade.

Lucas-Kanade method

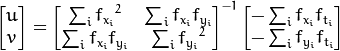

We have seen an assumption before, that all the neighbouring pixels will have similar motion. The Lucas-Kanade method takes a 3x3 patch around the point, so all 9 points are assumed to have the same motion. We can find $(f_x, f_y, f_t)$ for these 9 points. So now our problem becomes solving 9 equations with two unknown variables, which is over-determined. A solution is obtained with a least-squares fit. Below is the final solution, a two-equation, two-unknown problem that can be solved directly:

$$\begin{bmatrix} u \\ v \end{bmatrix} = \begin{bmatrix} \sum_i f_{x_i}^2 & \sum_i f_{x_i} f_{y_i} \\ \sum_i f_{x_i} f_{y_i} & \sum_i f_{y_i}^2 \end{bmatrix}^{-1} \begin{bmatrix} -\sum_i f_{x_i} f_{t_i} \\ -\sum_i f_{y_i} f_{t_i} \end{bmatrix}$$
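The least-squares step described above can be sketched directly in NumPy. This is an illustrative single-point, single-level version, not OpenCV's implementation: gradients come from np.gradient (central differences), $f_t$ is a plain frame difference with $dt = 1$, and the test frames are a synthetic Gaussian blob (the helper names gaussian_blob and lucas_kanade_at are hypothetical). A 3x3 window corresponds to half=1; the example uses a 7x7 window (half=3) because a smooth blob has nearly parallel gradients inside a 3x3 patch, which can make the 2x2 matrix poorly conditioned.

```python
import numpy as np

def gaussian_blob(h, w, cx, cy, sigma=6.0):
    """Smooth synthetic frame: a Gaussian blob centred at (cx, cy)."""
    y, x = np.mgrid[0:h, 0:w].astype(np.float64)
    return np.exp(-((x - cx) ** 2 + (y - cy) ** 2) / (2.0 * sigma ** 2))

def lucas_kanade_at(f1, f2, x, y, half=1):
    """Least-squares (u, v) at pixel (x, y) over a (2*half+1) x (2*half+1) window.

    half=1 gives the 3x3 patch (9 equations) described in the text.
    """
    fy, fx = np.gradient(f1)  # axis 0 is rows (y), axis 1 is columns (x)
    ft = f2 - f1              # temporal derivative with dt = 1 frame
    win = (slice(y - half, y + half + 1), slice(x - half, x + half + 1))
    fx_w, fy_w, ft_w = fx[win].ravel(), fy[win].ravel(), ft[win].ravel()
    # 2x2 normal matrix (sums over the window) and right-hand side
    A = np.array([[np.sum(fx_w * fx_w), np.sum(fx_w * fy_w)],
                  [np.sum(fx_w * fy_w), np.sum(fy_w * fy_w)]])
    b = np.array([-np.sum(fx_w * ft_w), -np.sum(fy_w * ft_w)])
    u, v = np.linalg.solve(A, b)
    return u, v

# Frame 2 is frame 1 with the blob moved by (0.3, 0.2) pixels.
f1 = gaussian_blob(64, 64, 32.0, 32.0)
f2 = gaussian_blob(64, 64, 32.3, 32.2)
u, v = lucas_kanade_at(f1, f2, x=36, y=35, half=3)
print(u, v)
```

With this smooth blob and a sub-pixel shift, the estimate should land close to the true motion of (0.3, 0.2); larger shifts break the linearization, which is where the pyramids discussed below come in.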

(Note the similarity of the inverted matrix to the one used in the Harris corner detector. It indicates that corners are better points to track.)

So from the user's point of view, the idea is simple: we give some points to track, and we receive the optical flow vectors of those points. But again there are some problems. Until now, we were dealing with small motions, so the method fails when there is large motion. Again, we go for pyramids. When we go up in the pyramid, small motions are removed and large motions become small motions. So by applying Lucas-Kanade there, we get optical flow along with the scale.

Lucas-Kanade Optical Flow in OpenCV

OpenCV provides all of this in a single function, cv2.calcOpticalFlowPyrLK(). Here, we create a simple application that tracks some points in a video. To decide which points to track, we use cv2.goodFeaturesToTrack(). We take the first frame, detect some Shi-Tomasi corner points in it, then iteratively track those points using Lucas-Kanade optical flow. To cv2.calcOpticalFlowPyrLK() we pass the previous frame, the previous points and the next frame. It returns the next points along with status values, each of which is 1 if the corresponding next point was found and 0 otherwise. We pass these next points as the previous points in the next step. See the code below:

Dense Optical Flow in OpenCV

The Lucas-Kanade method computes optical flow for a sparse feature set (in our example, corners detected using the Shi-Tomasi algorithm). OpenCV provides another algorithm to find the dense optical flow, which computes the optical flow for all the points in the frame. It is based on Gunnar Farnebäck’s algorithm, which is explained in “Two-Frame Motion Estimation Based on Polynomial Expansion” by Gunnar Farnebäck (2003).

The sample below shows how to find the dense optical flow using the above algorithm. We get a 2-channel array with optical flow vectors, $(u,v)$. We find their magnitude and direction, and color code the result for better visualization: direction corresponds to the Hue channel of the image and magnitude corresponds to the Value channel. See the code below:

See the result below:

OpenCV comes with a more advanced sample on dense optical flow; please see samples/python2/opt_flow.py.
